As artificial intelligence matures and expands across industries, the conversation has shifted from “Can we do this?” to “How do we do it responsibly?”

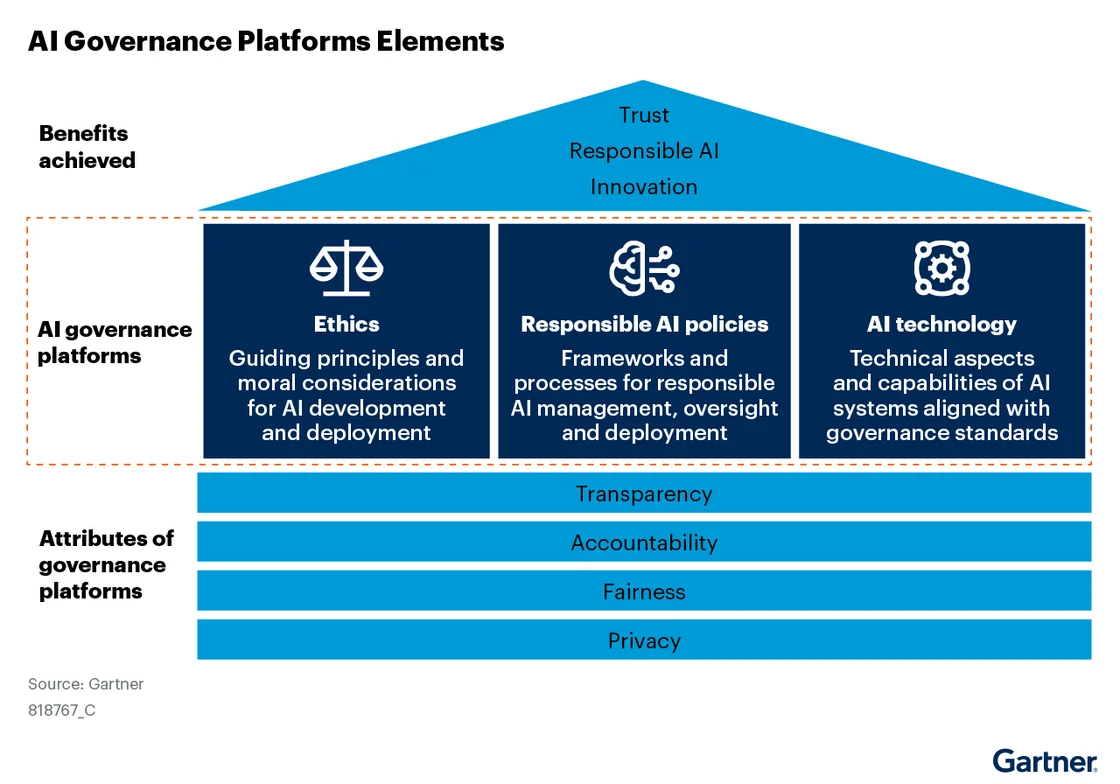

The answer lies in AI governance: the frameworks, policies, and tools that ensure AI systems are fair, transparent, and aligned with human values.

The Rulebook for Responsible AI

AI governance is often described as a “rulebook” for AI development and deployment.

This post breaks that rulebook down into three key chapters: Ethics, Policy, and Testing, the foundational pillars of any successful AI governance strategy.

Chapter 1. Ethics: The Foundation of Responsible AI

Before a single model is built, ethical principles must be clearly defined. These principles act as the compass that guides AI strategy, development, and deployment.

Key components of ethical AI include:

- Fairness – Proactively addressing bias to ensure equitable outcomes.

- Accountability – Establishing who is responsible for AI decisions and outcomes.

- Transparency – Making systems explainable and accessible to users and regulators.

- Privacy – Safeguarding personal data at every stage.

- Data Responsibility – Ensuring data is accurate, relevant, and handled with integrity.

When these principles are front-loaded into the AI lifecycle, they create a foundation for trust both within the organization and with its users.

Chapter 2. Responsible AI Policies: Turning Ethics into Action

Ethics on its own isn’t enough. Organizations need defined Responsible AI policies to operationalize their values.

Examples of responsible AI policies include:

- Model cards – Documentation that details a model’s purpose, limitations, and fairness assessments.

- Guardrails for generative AI – Safeguards that prevent harmful or biased outputs.

- Human-in-the-loop systems – Ensuring AI augments, rather than replaces, human decision-making.

These policies create repeatable, scalable standards that teams can follow, which is especially important in large enterprises running multiple AI initiatives.
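To make two of these policies concrete, here is a minimal Python sketch of a model card record paired with a human-in-the-loop gate. Everything in it is an illustrative assumption rather than a standard schema or any vendor's API: the `ModelCard` fields, the `review_threshold` value, the `route` function, and the example model name are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Hypothetical minimal model card: purpose, limitations, fairness notes."""
    name: str
    purpose: str
    limitations: list[str] = field(default_factory=list)
    fairness_assessments: list[str] = field(default_factory=list)
    # Assumed policy field: scores below this value go to a human reviewer.
    review_threshold: float = 0.9

    def to_text(self) -> str:
        """Render the card as plain text for documentation or audits."""
        lines = [f"Model card: {self.name}", f"Purpose: {self.purpose}", "Limitations:"]
        lines += [f"- {item}" for item in self.limitations]
        lines.append("Fairness assessments:")
        lines += [f"- {item}" for item in self.fairness_assessments]
        return "\n".join(lines)


def route(card: ModelCard, score: float) -> str:
    """Return 'auto' only for confident scores; everything else goes to a person."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    return "auto" if score >= card.review_threshold else "human_review"


# Example usage with made-up values.
card = ModelCard(
    name="resume-screener-demo",
    purpose="Rank applications for recruiter review",
    limitations=["Not validated for roles outside the training data"],
    fairness_assessments=["Selection-rate comparison across groups"],
)
print(card.to_text())
print(route(card, 0.95))
print(route(card, 0.40))
```

Keeping the review threshold on the card itself is one possible design choice: the documented policy and the enforced policy then come from the same place, which makes drift between the two easier to spot.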

Chapter 3. AI Technology: Testing for Trustworthiness

Governance doesn’t stop at launch. Ongoing testing, monitoring, and validation are essential to ensure AI performs as intended and remains compliant as regulations evolve.

Some organizations use custom-built testing tools, while others rely on widely used open-source tools such as John Snow Labs’ LangTest, which provides evaluations for fairness, bias, and accuracy. At NLP Logix, we have implemented LangTest in a talent acquisition AI model that has been certified as non-biased, supporting fairness and equality in recruitment.
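As a rough illustration of what an ongoing fairness check can look like, the sketch below compares selection rates across groups and flags the model for review when the lowest rate falls below 80% of the highest. This is plain Python, not LangTest's API, and it is not a description of how any particular model was certified. The 0.8 cutoff is the "four-fifths" rule of thumb commonly cited in US employment selection guidance, used here as an assumption; the group labels and records are made-up toy data.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs; returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selections")
    return min(rates.values()) / highest


# Made-up toy data: 10 candidates per group.
records = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(records)
ratio = impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; set per your own policy

print(rates)
print(f"impact ratio: {ratio:.2f}, needs review: {needs_review}")
```

A check like this is only one signal. Running it on a schedule against production decisions, rather than once before launch, is what turns it into the ongoing monitoring described above.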

Governance Beyond Compliance

While regulation is a driving force, governance should be viewed as a strategic asset.

As AI regulations continue to emerge globally, staying compliant is no small task, and companies like Pacific AI are positioned to play a critical role. Pacific AI offers a free AI Policy Suite and an AI Governance Certification designed to support responsible use of AI in accordance with more than 80 AI-related laws, regulations, and standards across national, state, and international jurisdictions.

Organizations that proactively invest in governance now are better equipped to navigate evolving regulations, build customer trust, and unlock AI’s full potential.

AI governance is no longer optional; it’s foundational. Whether you’re deploying your first AI model or scaling enterprise-wide systems, governance helps ensure that your innovations are sustainable, compliant, and trusted.

Learn more about how to incorporate responsible AI into your business by contacting our team of experts.